The Crucial Role of Data Architecture in Asset Management

The Crucial Role of Data Architecture in Asset Management

Data is the lifeblood of any financial institution. On the buy-side, it is perhaps the single greatest asset in a firm’s inventory. Yet, despite this, managers too often lack a sound data strategy and architecture to effectively leverage this asset. Beyond the clear operational risks and inefficiencies, the lack of a data architecture also heavily weighs on a firm’s ability to adopt new transformative technologies, like automation, tokenization, and AI, all of which are increasingly important in the industry.

Interestingly, this is not inherently a function of scale or financial product complexity, as the problem can plague large and small managers alike across multiple investment disciplines and strategies.

Given the data-rich environment in which we now operate, however, a robust data architecture is one of the most critical technology investments an asset manager can make. In its absence, managers risk drowning in a data deluge, hindering their ability to respond swiftly to market dynamics, pursue new investment opportunities, or to remain competitive with more technically proficient peers and fintechs.

WHY IS A DATA ARCHITECTURE SO ELUSIVE?

So, why is a data architecture so elusive for many buy-side institutions?

For one, the prevalence of legacy systems and processes that have accumulated and grown organically over time remains a significant hurdle to overcome. Asset management firms often rely on outdated and disparate systems that were not designed to handle the complex and diverse investment products, strategies, and data requirements of today’s financial landscape. This legacy infrastructure can lead to data silos, inconsistent data quality, and fragmented data access, hindering effective data management.

Additionally, many managers face resource constraints and budget limitations, making it challenging to invest in and implement a modern data architecture. This can be especially daunting for organizations already grappling with tight budgets and other operational priorities. For established firms, in fact, it’s not uncommon to find over 70% of IT budget allocated to business-as-usual (BAU) tasks, leaving little remaining for more strategic and discretionary initiatives. Talent may also be matched to such a technology portfolio, with skills more closely aligned to maintenance efforts required by legacy infrastructure, platforms, and applications instead of innovation and change management.

In some organizations, data ownership and governance responsibilities are not well-defined or enforced. For example, I’ve observed some managers attribute data ownership and governance to the technology organization, when the responsibility should instead sit squarely with business users. Organizational culture can therefore also impact the success or failure of data quality, governance, and controls. Have you ever seen an employee performance evaluation rate data stewardship? One shop even argued that its portfolio managers were too important to be bothered with the quality or completeness of deal-driven data associated with their bank debt and distressed transactions. You can imagine what that data platform looked like!

Finally, there may be a lack of awareness or understanding of the critical role that data architecture plays in asset management. Such an endeavor requires a strategic vision as to how enhanced data capabilities can contribute to the growth and operability of the organization.

Some firms may underestimate the impact of poor data architecture on their investment processes and overall competitiveness, which can lead to complacency in addressing these issues. They may also mistakenly believe that individual application endpoints or databases satisfy the data architecture when nothing could be further from the truth.

THE BASICS

At its core, a well-structured data architecture is the bedrock of an asset manager’s operations, empowering them to navigate the intricacies of the financial markets with efficiency, accuracy, and compliance. It offers a systematic framework to manage and deliver data, ensuring quick access and retrieval across the enterprise. The architecture should help to ensure that data is reliable, accurate, complete, and auditable. It influences data quality, reduces operational risk, and underpins a manager’s front-to-back capabilities, including for research, investments, operations, risk management, accounting, regulatory, and client service.

The data architecture may incorporate multiple data services and repositories, each one fit for purpose for its target use case but organized logically as part of a cohesive whole. Beyond the data itself, the architecture supports application and discovery interfaces, data ingestion, orchestration, integration, pipeline processing, elasticity, performance, transformations, governance, management, resiliency, security, and cost optimization, amongst other considerations.

Lastly, the data architecture empowers asset managers to harness the benefits of technological advancements and innovation. In the fast-evolving landscape of finance, asset managers who adopt cutting-edge technologies, like AI and machine learning, gain a competitive edge. A sound data architecture provides the flexibility and agility needed to leverage these technologies effectively, enhancing investment decisions, identifying market opportunities, and streamlining operational processes. It is the foundation upon which innovation thrives, ensuring that asset managers remain at the forefront of the industry’s transformative journey.

THE SHAPE OF DATA

Typically referred to as the “5 Vs” amongst data scientists, data is often characterized along the vectors of volume, velocity, variety, veracity, and value. I also like to introduce “venue” as another important characteristic, since the source of data can dictate its availability and use, along with more practical considerations like licensing and distribution. Collectively, these “Vs” help data professionals and organizations make informed decisions about data utility, storage, delivery, processing, governance, and analysis strategies.

Most asset managers typically rely on a diverse range of data types to make informed investment decisions and manage portfolios effectively. These data types can be broadly categorized into two main groups: quantitative and qualitative data.

Quantitative data includes numerical, measurable information such as transactions, positions, market prices, financial statements, trading volumes, economic indicators, and historical performance data. Some reference data can also incorporate both quantitative and qualitative elements, as would be found in a security master or within deal covenants. This data usually lends itself well to tabular, relational, or columnar database structures. Asset managers heavily rely on quantitative data to assess the value, cashflows, performance, and potential risks associated with investments.

Qualitative data, on the other hand, provides context. It typically encompasses non-numeric information, including certain reference data, news articles, research reports, company news, industry trends, geopolitical events, and investor sentiment. Qualitative data is especially valuable for understanding specific deal and issuer dynamics, industry sectors, the macro environment, external events, and market sentiment, amongst other things. It can be used to help formulate and support an investment thesis, and to curate investment signals. Most managers, including those pursuing systematic or fundamental strategies, use a combination of quantitative and qualitative data to derive a holistic view of the investment landscape, make informed decisions, and construct well-balanced portfolios that align with their stated investment objectives and risk tolerances.

Additionally, the availability and ingestion of alternative, often unstructured data has brought enhanced insights to traditional investment analyses. Such data sets are typically qualitative in nature, but can also span the quantitative category. Often referred to as “big data”, this data is better suited to cloud-based object storage. By harnessing such data from traditional media, web content, sensor feeds, social media, satellite imagery, audio, or credit card transactions, for example, asset managers can gain a more comprehensive and contemporaneous view of market dynamics, and a deeper understanding of specific industry sectors, market regimes, and companies.

The inclusion of these sources can lead to more robust analytical models and risk management, enhancing the ability to identify investment opportunities, forecast potential market catalysts, and assess the vulnerability of portfolios to various market events. For instance, satellite data can offer valuable insights into the performance of retail businesses or commercial real estate by tracking foot traffic, parking lot congestion, or monitoring shipping activity. Similarly, web scraping can help to identify consumer behaviors and trends that may affect certain sectors.

Collectively, these empirical observations facilitate more data-driven investment decisions. This allows managers to identify emerging trends, market transitions, and early indicators of performance, all of which play pivotal roles in the construction and ongoing management of well-informed investment strategies. These data sets, too, are also increasingly used to train AI models that leverage natural language processing, generative AI, speech recognition, and computer vision.

DATA ARCHITECTURE AS THE PREREQUISITE FOR AI

AI is all about the data. In the simplest terms, an AI model leverages a statistical representation of the data corpus against which a model has been trained. Most off-the-shelf foundational models, including large language models (LLMs), are trained against a generic corpus. While these models provide incredible breadth of coverage and a strong language foundation, optimizing their performance to incorporate deep vertical domain and institutional expertise requires the use of data-centric strategies such as fine-tuning, plugins, and prompt engineering.

In previous iterations, model-centricity was the primary focus of AI development and fine-tuning. While still dependent on a data corpus, model-centric development largely focuses on architecture, feature engineering, algorithms, and parameterization. Data, in this case, is used to “fit” the model, but is otherwise a static artifact. Even outside of AI, model-centric development should be familiar to those in finance, since it has been used for decades to derive and support various quantitative analytics used for valuation, structuring, and risk management.

In the field of AI, however, the output of these efforts has produced powerful neural networks, reinforcement learning models, speech recognition, transformer architectures, and other innovations. As the capabilities of these architectures have greatly improved, powered in large part by the nearly ubiquitous availability of high-speed networks, cloud compute, and storage, additional model-centric enhancements tend to yield only incremental gains for all but the largest of organizations.

This has led to a marked shift towards more data-centric AI pursuits which emphasize the iterative and collaborative processes of building AI systems through data. The approach recognizes that data is a dynamic and essential part of the overall AI development process. It allows for collaboration with subject matter experts and the incorporation of their knowledge into the model. This ultimately drives higher model accuracy compared to the use of model-centric methods, alone. And, as AI models have become more complex and require larger or more domain-specific training datasets, a sound data architecture is a critical prerequisite to enable curation, annotation, and iteration, while also ensuring scalability, governance, access, and security.

PATTERNS AND ANTI-PATTERNS

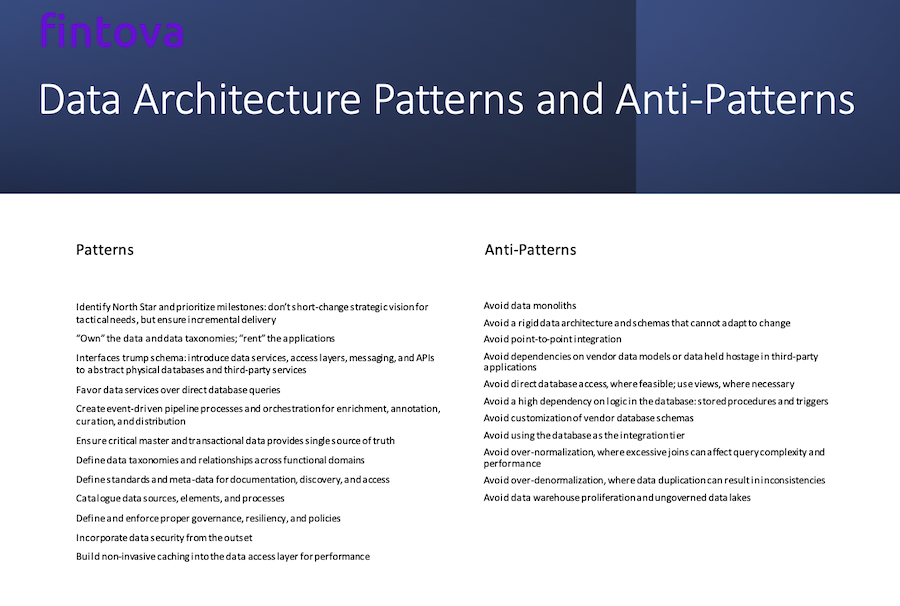

In my experience, there are some fundamental technical patterns and anti-patterns to consider as best practices (or avoidable practices) when designing a data architecture (Table 1).

Not to diminish the value of a strong data schema, but interfaces, services, and access layers are far more important. It’s advisable to separate data and logic, and equally advisable to abstract physical database infrastructure, where feasible. Avoid database and vendor lock-in, where possible. Underlying data repositories and schemas can and do change, but data interfaces and access should be standardized and designed for business use, first and foremost.

Indeed, as data is increasingly pushed to the edge where more technically adept users can leverage it in models, Python scripts, spreadsheets, or other end-user applications, providing secure, discoverable, and intuitive access to enterprise data assets is especially useful. An analogy I frequently use is one of an electrical wall socket. The socket provides a standard receptacle and power supply to many different electrical devices that service a range of applications. A data architecture should provide a similar conduit.

Data pipelines and orchestration coordinate the movement of data between various components of the architecture, ensuring data flows smoothly from ingestion to consumption, and is enriched and catalogued along the way.

If properly architected, a data platform will provide quick, extensible, and secure access to key enterprise data assets.

OF WAREHOUSES AND LAKES

Asset managers typically utilize a variety of data repositories, including from both self-managed and third-party applications and services. Market data vendors offer reference data, prices, credit ratings, analytics, and news. Some, like Bloomberg, also offer portfolio management and trading applications atop their extensive data inventories, though with functional and cost constraints that may not make sense for many managers. Other vendors, administrators, and custodians provide OMS and EMS capabilities, CRM functionality, portfolio accounting, risk analytics, cash management, settlements, and other capabilities. Managers may develop and manage their own proprietary applications and data assets, as well. With data originating from, or localized across so many endpoints, the data architecture must seek to deliver order and consistency from an otherwise fragmented landscape.

Data warehouses and data lakes have become a common mechanism through which to fulfill this requirement by pooling together data from various sources. Hardly a panacea, they offer both advantages and disadvantages. Warehouses are well-suited to structured and semi-structured data and can help to simplify traditional reporting and analysis, while lakes better support the massive amounts of unstructured, alternative data sets currently available. Both are essentially data replicates that house copies of source data to achieve centralization for ease of use, management, and performance. They are equally susceptible to ETL complexity, data latency, and high costs. Warehouses present additional schema rigidity that can impact change management, especially in the absence of logical interfaces. Moreover, without proper architecture and governance, these data marts can degrade into bespoke silos.

Data lakes, in particular, present additional challenges and complexities. They can quickly become dumping grounds – swamps, as some suggest – as organizations attempt to centralize diverse data sets that are inherently fragmented, often ungoverned, and lack suitable interfaces to deliver data in a usable business context. In some cases, the data lake becomes little more than an expensive triage to coalesce data proliferation and kick the can down the road, instead of developing a more comprehensive, strategic data architecture.

FABRIC OR MESH?

The data fabric and data mesh are two distinct architectural paradigms in the domain of data management, each with its unique characteristics and objectives. Both seek to abstract the underlying data infrastructure to create a logical representation of the overall data estate. Both also catalogue data assets to provide search, discovery, and access. While warehouses and lakes may certainly be important parts of the ecosystem, there is no dependency on the types of databases or data services that can be represented. The difference between the two approaches largely comes down to the management and governance. The data fabric is centralized, whereas the data mesh is decentralized. Because of this, organizational structure and skills may favor one architecture over another.

A data fabric is a unified architecture that provides a cohesive layer for data integration and management, focusing on connecting and centralizing data from diverse sources for easy access and analysis. Data virtualization platforms like Denodo and TIBCO Data Virtualization allow you to access and integrate data from different sources without physically moving it, which may obviate the need for certain warehouses and lakes. Tools like Apache Kafka and Apache Spark Streaming can be used for real-time data integration and processing. Orchestration tools, like Apache Airflow, can help to automate data workflows and pipeline management. The specific tools and technologies can vary, of course, and are primarily based on the organization’s needs and existing infrastructure. The goal is to create a flexible and scalable data architecture that enables seamless data access and integration across the enterprise.

A data mesh, in contrast, is an organizational and architectural construct that decentralizes data ownership, treating data as a product and distributing data responsibilities across different domains or teams. While a data fabric emphasizes centralized integration, a data mesh decentralizes data management, aiming to make data more scalable and domain centric. It promotes a self-service data infrastructure that enables domain-oriented teams to manage their own data products. Centralized utilities and standards still exist, particularly for meta-data management, documentation, data discovery, communication, and security. Here, the goal is to streamline data management and to encourage collaboration between teams without unnecessary centralization, bottlenecks, or data silos.

To implement a data mesh effectively, managers need to adopt modern data technologies and architectures, such as microservices and containerization. Microservices allow for the development of scalable and modular data products, while containerization provides a standardized and portable environment for running data applications while also remaining platform agnostic. Additionally, the use of APIs facilitates the seamless integration of data products and services, enabling asset managers to build a cohesive and adaptable data ecosystem.

CONCLUSION

A sound data architecture for asset managers combines traditional data management practices with emerging design concepts and modern technologies to create a flexible and efficient infrastructure for enterprise data organization, analysis, and utilization. By adopting these principles and technologies, asset managers can navigate the complex data landscape with agility, uncover valuable insights, reduce operational risk, enhance operating leverage, and make well-informed investment decisions while fostering collaboration and innovation within their organizations.

About Author

Gary Maier is Managing Partner and Chief Executive Officer of Fintova Partners, a consultancy specializing in digital transformation and business-technology strategy, architecture, and delivery within financial services. Gary has served as Head of Asset Management Technology at UBS; as Chief Information Officer of Investment Management at BNY Mellon; and as Head of Global Application Engineering at Blackrock. At Blackrock, Gary was instrumental in the original concept, architecture, and development of Aladdin, an industry-leading portfolio management platform. He has additionally served as CTO at several prominent hedge funds and as an advisor to fintech companies.